Understanding the Cloud Native Landscape

August 1, 2024


The Cloud Native Computing Foundation (CNCF) is a non-profit organization that fosters a healthy ecosystem of technologies for building and running cloud-native applications, that is, applications designed specifically to leverage the benefits of cloud computing. Major banks and companies in gaming, AI, transportation, insurance, and other industries use these technologies to strengthen the fabric of their infrastructure platforms, which are built using microservices architectures, deployed in containers, and managed using orchestration tools. This approach offers several advantages when following the right guidelines.

Scalability

Cloud-native applications can scale up and down seamlessly to handle varying workloads, ensuring optimal resource utilization. This works even when operating at planet scale, as long as your architecture is built with real-world cloud provider limitations in mind.

For example, think Tax Day, Cyber Monday, or popular gaming events, where ad-hoc traffic adds hundreds of thousands of requests per minute until it reaches a ceiling of millions of concurrent users. Those scenarios are best served when your API gateways are spread across multiple Kubernetes clusters, additional worker nodes are pre-allocated appropriately, and the provider-side Kubernetes control plane is scaled accordingly. This allows swift distribution of incoming traffic to the internal services that do the heavy lifting to satisfy individual requests.
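As a rough illustration of the pre-allocation step, here is a back-of-the-envelope sketch in Python. The function name, the per-pod throughput, the pods-per-node figure, and the headroom factor are all hypothetical placeholders; real numbers would come from your own load tests and provider limits.

```python
import math


def nodes_to_preallocate(peak_rpm: int, rpm_per_pod: int, pods_per_node: int,
                         clusters: int = 1, headroom: float = 0.3) -> int:
    """Estimate worker nodes per cluster for an expected traffic peak.

    All inputs are illustrative assumptions, not measured values.
    """
    if min(peak_rpm, rpm_per_pod, pods_per_node, clusters) <= 0:
        raise ValueError("all capacity inputs must be positive")
    # Pods needed to serve the peak, plus safety headroom.
    pods = math.ceil(peak_rpm * (1 + headroom) / rpm_per_pod)
    # Nodes needed in total, then spread evenly across clusters.
    nodes = math.ceil(pods / pods_per_node)
    return math.ceil(nodes / clusters)


if __name__ == "__main__":
    # Hypothetical peak: 600,000 extra requests per minute over 4 clusters.
    print(nodes_to_preallocate(600_000, 1_200, 20, clusters=4, headroom=0.25))
```

An estimate like this is only a starting point; it says nothing about control plane limits, which still have to be checked with the provider.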

Resilience

Built with fault tolerance in mind, microservices can quickly self-heal and recover from failure, minimizing downtime, when they are written with circuit breakers, timeouts, retries, fallbacks, component isolation, health checks, failovers, and event-based communication. Defining microservice contract terms goes a long way toward setting proper expectations.
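To make a few of these primitives concrete, here is a minimal Python sketch that combines retries with exponential backoff, a simple circuit breaker, and a fallback. The class and function names are hypothetical, the thresholds are arbitrary, and timeouts are assumed to be enforced inside the called function. Production systems would more likely rely on a hardened library or on service mesh features.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after  # seconds before a trial call is allowed
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Half-open: let one trial call through; a failure reopens the circuit.
            self.opened_at = None
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_fallback(breaker, func, fallback, attempts=3, base_delay=0.1,
                       sleep=time.sleep):
    """Retry func through the breaker, then fall back if it keeps failing."""
    for attempt in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            break  # do not hammer a dependency that is known to be down
        except Exception:
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # exponential backoff
    return fallback()
```

A caller might wrap a request to a downstream service as `call_with_fallback(breaker, fetch_price, lambda: cached_price)`, where both names stand in for your own functions.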

Speed and Agility

Fast development and deployment cycles allow for rapid innovation and response to market needs. This is best supported by a culture of production rollouts multiple times each day, each containing a small number of code repository commits, and by using canaries to quickly surface unexpected behaviors. With proper rollback branches, potential issues can then be fixed without customers being impacted for longer than it takes to revert a broken deployment.
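One way to picture a canary check is as a comparison of error rates between the current release and the canary. The Python sketch below is a simplified assumption of such a gate: the function name, the 1.5x tolerance, the minimum sample size, and the error-rate floor are all made up for illustration, and real canary analysis usually looks at more signals than errors alone.

```python
def canary_verdict(baseline_errors: int, baseline_total: int,
                   canary_errors: int, canary_total: int,
                   max_ratio: float = 1.5, min_requests: int = 100,
                   rate_floor: float = 0.001) -> str:
    """Return "wait", "promote", or "rollback" for a canary deployment."""
    if canary_total < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    canary_rate = canary_errors / canary_total
    # Tolerate a small absolute error rate even if the baseline is spotless.
    allowed = max(baseline_rate * max_ratio, rate_floor)
    return "rollback" if canary_rate > allowed else "promote"
```

A "rollback" verdict would then trigger the revert described above, so that only the small share of traffic routed to the canary is affected.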

Cost Efficiency

Pay-as-you-go cloud models can reduce infrastructure costs, especially when building new services with evolving requirements. Once the sustained infrastructure footprint is well understood, there are further cost optimization opportunities, including leveraging traditional data centers and bare metal hardware.

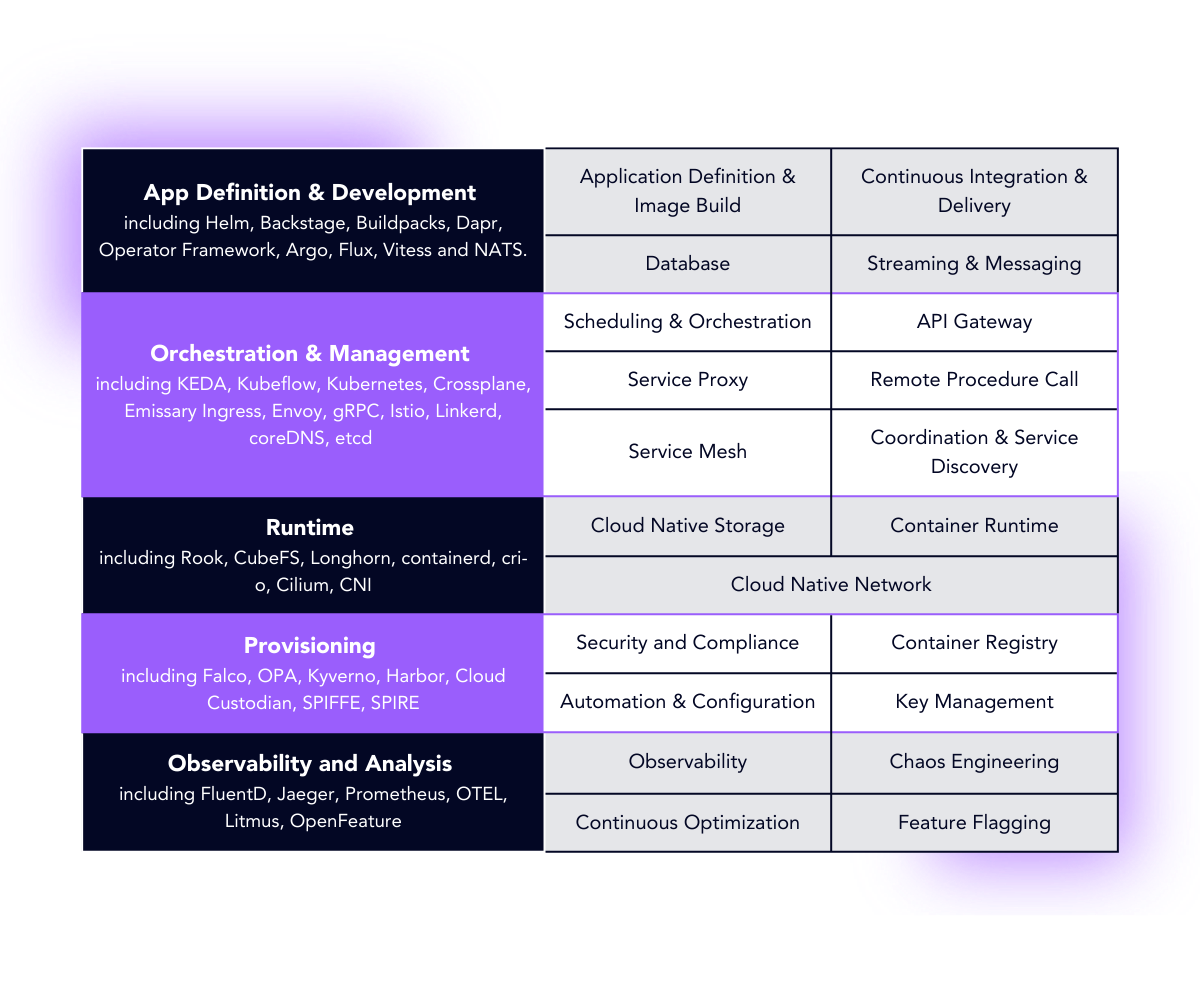

The CNCF structures its landscape of 190 projects as follows. The table below includes some graduated and incubating projects for your inspiration. Exploring the broader set of CNCF projects is well worth your time when considering their use to benefit from the advantages outlined above.

Now that we know several technical reasons that make the use of CNCF projects appealing, let’s take a look at a relevant subset of projects for real-world scenarios. Your current and next projects will likely need to orchestrate compute resources, facilitate secure communications between your services, help you build, package, and deploy service updates, and enable you to manage your infrastructure resources in a frugal, cost-effective manner that is also compliant with regulations and corporate guidelines.

Scheduling and Orchestration

Tools like Kubernetes automate the deployment, scaling, and management of containerized applications. Serverless and managed Kubernetes abstractions like AWS Fargate usually fit narrower use cases due to limitations such as not being able to run privileged containers, and restrictions on the number of open files and on memory consumption. Kubernetes, on the other hand, is a flexible foundation for your container orchestration when you want to keep your options open. Kubernetes clusters working in concert across cloud accounts can enable applications to support hundreds of millions of end users.

All this flexibility comes at a price. Organizations struggle with Kubernetes cluster configurations. Check out the Fairwinds Kubernetes Benchmark Report 2024: Security, Cost and Reliability Workload Results for guidance on what to look out for and improve. To quote the report: "28% of organizations with more than 90% of workloads running with insecure capabilities." When using CNCF orchestration and management tooling, such as Crossplane, to create and maintain your Kubernetes clusters, explore which configuration aspects to enforce so that your clusters and workloads run more securely.

Organizations will benefit from de-risking and accelerating Kubernetes version upgrades to improve the cluster security posture and to offer the latest features to their developers, who will then be able to innovate faster. Explore Chkk.io to get ahead.

Container Runtime

These tools manage the container lifecycle, including container creation and execution. Examples include Docker Engine and containerd. By including application dependencies in the containers, and leaving environment configuration consumable from the outside, enterprises make them portable across environments and accounts, which increases their flexibility.

Service Mesh

Service meshes provide programmable, application-aware networking, traffic insights, and security features for containerized applications. Linkerd and Istio are popular service meshes.

When building scalable cloud-native platforms, some architectures can get away without a service mesh for some time, which avoids maintaining, upgrading, and fixing functionality that is not yet needed. However, once application discovery is required, service meshes complement high-scale architectures through consistent configuration of Envoy or micro proxies, data plane security, networking policies, and resiliency primitives such as retries, failovers, and circuit breakers. They work best alongside solid plans for canaries and thoroughly tested service mesh software upgrades.

Application Definition & Image Build

Tools like Helm simplify the packaging and deployment of applications within Kubernetes environments. Businesses benefit from a consistent deployment approach and from built-in capabilities to swiftly roll back to the last good state when things go wrong.

This capability is especially helpful in combination with properly established git rollback branches. It has been great for solving issues that were only found after changes went out to live production. The benefit is the ability to fix issues while only a small subset of customers is briefly impacted.

Beyond these core categories, the CNCF landscape offers a wide range of tools for building and managing cloud-native deployments. Let's delve into some of these essential tools.

Infrastructure as Code (IaC) Tools

Tools like Ansible, Terraform, and Pulumi enable you to define and manage your cloud infrastructure in code. This code-driven approach ensures consistency, repeatability, and reduces the risk of manual errors during infrastructure provisioning and configuration. When teams start with automation, a first step is often a script. Scripts grow and eventually evolve into applications over time, and then into an interdependent web of tools and services.

Standardizing on IaC tooling has helped me accelerate platform adoption. I eventually experienced the limits of Terraform for provisioning and upgrading Kubernetes clusters. Using a Terraform workspace per cluster for hundreds of clusters was time consuming during an emergency fix rollout because of the time it took to plan and apply the changes. Conversely, using one or a small number of Terraform workspaces for many clusters and multiple cloud accounts increased the blast radius when the state file was corrupt and needed to be manually corrected. Terraform state drift also adds work ahead of applying new changes when other modifications were made outside of Terraform, such as adding a network peering configuration to a VPC.

Crossplane

This open-source project takes cloud-native development a step further. It provides a framework for building cloud-agnostic APIs. Imagine creating an internal "Cloud API" experience within your organization. Crossplane allows you to define and manage API specifications for interacting with your infrastructure and services, regardless of the underlying cloud provider you're using (AWS, Azure, GCP, etc.). This empowers your internal developers with a consistent API layer, fostering a more self-service approach and accelerating development lifecycles. In addition, Crossplane frequently reconciles desired and actual external resource states, swiftly addressing drift which is common with native Terraform resource management. Crossplane combines the benefits of flexible configuration languages with a cloud-native control plane resource lifecycle management approach.
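The reconciliation idea can be sketched in a few lines of Python. This is not Crossplane's implementation; `FakeCloud` is a hypothetical in-memory stand-in for an external provider API, and the resource names and fields are invented for illustration. The point is only that a control loop repeatedly compares desired and actual state and corrects any difference.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloud:
    """Hypothetical in-memory stand-in for an external provider API."""
    resources: dict = field(default_factory=dict)


def reconcile(desired: dict, cloud: FakeCloud) -> list:
    """Bring actual state in line with desired state; return the actions taken."""
    actions = []
    for name, spec in desired.items():
        actual = cloud.resources.get(name)
        if actual is None:
            cloud.resources[name] = dict(spec)
            actions.append(f"create {name}")
        elif actual != spec:
            # Drift: someone changed the resource outside the control plane.
            cloud.resources[name] = dict(spec)
            actions.append(f"update {name}")
    return actions


if __name__ == "__main__":
    desired = {"bucket-a": {"region": "us-east-1", "versioning": True}}
    cloud = FakeCloud()
    print(reconcile(desired, cloud))                  # first pass creates the bucket
    cloud.resources["bucket-a"]["versioning"] = False  # simulate manual drift
    print(reconcile(desired, cloud))                  # next pass corrects it
```

Because the loop runs continuously rather than only when someone applies a plan, drift is corrected without a human noticing it first.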

It is a breath of fresh air to see the reduced cognitive load for developers who use the custom APIs of purpose-built internal developer platforms. These platforms abstract away much of the complexity that major cloud providers introduce because they aim to cater to a broad, diverse audience. Crossplane really shines in enabling organizations to build these internal developer platforms with its control plane paradigm and its ability to interface with any resource provider, as long as there is a Kubernetes controller that bridges the communication. Coincidentally, the controller is also called a “provider”. For information on how to write your own, please explore the Upjet code generation framework.

Helm

Helm utilizes charts, which are packages containing application image references, configurations, and deployment instructions. Helm simplifies application lifecycle management, making it easier to install, upgrade, and roll back deployments.

Some platform teams provide developers with a superset of Helm chart values, so that developers can quickly remove the aspects they do not wish to customize instead of thinking about which values to add. This leads to swift deployments with exactly those parameters that matter, and with significantly lower cognitive load. Individual development teams may further reduce the templates to keep only the options that are relevant to their team. This approach has increased developer productivity.
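Trimming a values file works because Helm layers user-supplied values over the chart's defaults: nested maps are merged key by key, while scalars and lists are replaced. The Python sketch below imitates that layering to show why a removed key simply falls back to the default. It is an approximation for illustration, not Helm's code, and the value names are hypothetical.

```python
import copy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Layer overrides on top of defaults without mutating either input."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)  # merge nested maps
        else:
            merged[key] = copy.deepcopy(value)  # scalars and lists are replaced
    return merged


if __name__ == "__main__":
    chart_defaults = {
        "replicaCount": 2,
        "resources": {"cpu": "250m", "memory": "256Mi"},
        "ingress": {"enabled": False},
    }
    # A team kept only the two settings it cares about.
    team_values = {"replicaCount": 4, "resources": {"memory": "512Mi"}}
    print(merge_values(chart_defaults, team_values))
```

In this example the team's file no longer mentions `ingress` or `resources.cpu`, so both keep their default values after the merge.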

ArgoCD & Flux

Both ArgoCD and Flux are open-source tools that provide GitOps-based continuous delivery for Kubernetes applications. GitOps is a declarative approach to managing infrastructure and applications, leveraging Git as the source of truth. ArgoCD continuously monitors Git repositories for changes and automatically deploys them to your Kubernetes cluster based on configurations defined in your Git repository. Flux operates similarly, ensuring your deployments are always in sync with your code and configurations.

ArgoCD and Flux are used successfully as continuous delivery tools by a broad set of enterprises, including Upbound customers, to orchestrate the deployment of configuration packages and to submit infrastructure resource claims.

SPIFFE & SPIRE

These tools work hand-in-hand to provide secure service-to-service communication within Kubernetes environments. SPIFFE (Secure Production Identity Framework for Everyone) defines a framework for assigning identities to workloads, while SPIRE implements this framework with a server and agents that attest workloads and issue them identities. Together, they provide a uniform identity control plane across modern and heterogeneous infrastructure with widespread industry adoption and integrations.
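A SPIFFE identity takes the form of a URI such as `spiffe://example.org/ns/prod/sa/api`, where the authority is the trust domain and the path names the workload. The Python sketch below parses and loosely validates such an ID. It is a simplified assumption of the rules, the example trust domain and path are made up, and a real system should use an official SPIFFE library instead.

```python
import re
from typing import NamedTuple
from urllib.parse import urlsplit


class SpiffeID(NamedTuple):
    trust_domain: str
    path: str


_TRUST_DOMAIN = re.compile(r"[a-z0-9._-]+")
_SEGMENT = re.compile(r"[A-Za-z0-9._-]+")


def parse_spiffe_id(value: str) -> SpiffeID:
    """Parse a SPIFFE ID, raising ValueError on obviously malformed input."""
    parts = urlsplit(value)
    if parts.scheme != "spiffe":
        raise ValueError("scheme must be 'spiffe'")
    if parts.query or parts.fragment:
        raise ValueError("query and fragment are not allowed")
    # The regex also rejects ports and user info, since ':' and '@' do not match.
    if not _TRUST_DOMAIN.fullmatch(parts.netloc):
        raise ValueError("invalid trust domain")
    if parts.path:
        for segment in parts.path.split("/")[1:]:
            if segment in (".", "..") or not _SEGMENT.fullmatch(segment):
                raise ValueError(f"invalid path segment: {segment!r}")
    return SpiffeID(parts.netloc, parts.path)


if __name__ == "__main__":
    print(parse_spiffe_id("spiffe://example.org/ns/prod/sa/api"))
```

Services can then base authorization decisions on the trust domain and path rather than on network location.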

Observability

The tools for cloud-native logging, monitoring, alerting and tracing include but are not limited to Fluentd, Elastic, Logstash, Loki, Prometheus, Grafana, Jaeger and Zipkin. They provide insight into the health of the cloud-native infrastructure and applications. Tracing is especially useful for illuminating where data flows between microservices.

Platform and application teams have improved the quality of their services through targeted observability implementations: showing on-demand dashboards, triggering alerts when metrics deviate significantly from their norm, triggering self-healing, pulling up logs for a given start and end time period, tracing a request through a complicated system of interdependent microservices, and, most notably, showing how revenue generation was impacted by deployments. Beyond troubleshooting and gaining insights, platform teams find metrics vital for developing platform service level objectives (SLOs) and offering a service level agreement (SLA) to their development and application team users. Observability can incur significant cost if it is not planned according to specific budgets. Decide which cardinality is best for which use cases.
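One simple way to alert on a metric that strays from its norm is to measure how many standard deviations the latest sample sits from the recent mean. The Python sketch below assumes a short window of recent samples and a threshold of three standard deviations; both are illustrative choices, and the function name is hypothetical. Monitoring systems typically offer this kind of rule natively.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, threshold: float = 3.0) -> bool:
    """Flag latest if it lies more than threshold standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is notable
    return abs(latest - mu) / sigma > threshold


if __name__ == "__main__":
    latency_ms = [100, 102, 98, 101, 99]  # hypothetical recent samples
    print(is_anomalous(latency_ms, 101))
    print(is_anomalous(latency_ms, 120))
```

The window length and threshold trade sensitivity against alert noise, so they are worth tuning per metric.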

Kubernetes

Kubernetes is a cornerstone of cloud-native development, offering features like service discovery, load balancing, and health checks.

Companies may distribute applications across a wide range of clusters. They then need to consider how applications discover each other, possibly through a service mesh and service identity domains, and how to position the clusters across multiple cloud provider accounts without exhausting low-level cloud provider network resource limits. Scaling the architecture to multiple clusters, accounts, and providers adds complexity and may require additional tools, e.g. Tetrate Service Bridge for secure multi-cloud microservices communications.

The Power of Cloud-Agnostic APIs with Crossplane

Major cloud providers offer a rich set of APIs for interacting with their infrastructure and services. These APIs provide a programmatic way to manage resources, automate tasks, and gain insights into your cloud environment. Crossplane allows enterprises to achieve similar functionality within their own cloud infrastructure, regardless of the underlying provider.

Here's the parallel.

Major Cloud Providers: Imagine a large department store with dedicated sections for clothing, electronics, furniture, and more. Each section has its own organization, layout, and staff. However, you can still navigate the entire store using a single shopping cart and checkout process.

Crossplane: Crossplane acts like a universal shopping cart for your internal cloud environment. Similar to IaC, it doesn't matter if your resources are spread across different clouds, custom networking, observability, AI/ML, and other providers. With Crossplane, you can define and manage your own APIs that provide a consistent way to interact with them all. This simplifies development, reduces vendor lock-in, and empowers your developers to focus on building innovative applications.

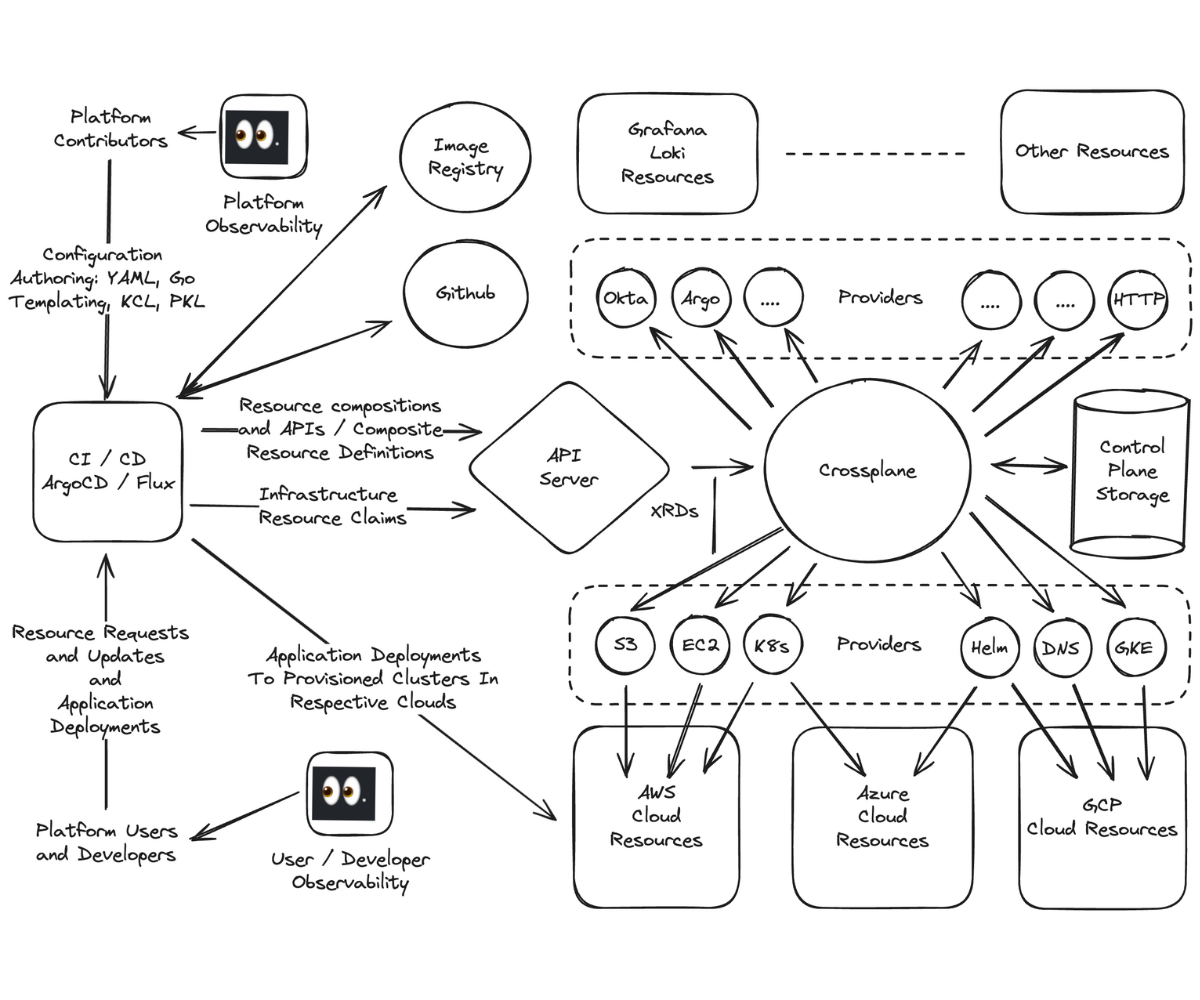

The diagram shows how platform contributors use Argo / Flux to apply internal developer platform configuration packages to Crossplane, and how platform users and developers use the same tools to submit resource requests to the custom platform API. Those resource requests are routed to Crossplane, which in turn interacts with external providers using Kubernetes “provider” controllers. Crossplane’s state is kept in etcd and backed up so that it may be restored in the rare event of a Kubernetes control plane management cluster being preempted.

The Cloud-Native Tools diagram is a high-level blueprint for how you can put together cloud native tooling, including Crossplane, to build and operate your own internal developer platform, which can improve your developer experience and innovation velocity.

Building Your Cloud Native Strategy

To learn how to choose the tools for your own cloud native tool stack, see my other blog post, “Choosing The Right Tools To Craft Your Cloud-Native Strategy.”