From Cloud to On-Prem: Leverage Upbound for Unified Resource Management

December 18, 2024

Upbound can do on-prem?! Even with OpenStack? Yes! During my tenure at Upbound, I've spoken with quite a number of customers looking for a way to build infrastructure in the cloud. For years, the convenience of the cloud has provided more overall value than maintaining in-house infrastructure, despite its cost. With the boom in AI, growing data-governance requirements, and rising costs, however, the swing back to on-prem and hybrid architectures is gaining momentum.

For these reasons, my current customer conversations, and those I had at KubeCon Salt Lake City, gravitated toward how Upbound could help with bare metal, hypervisors, and more. The perception I kept hearing was that Upbound handled only hyperscaler provisioning, only Kubernetes, or some combination of the two. Let's dig into creating some on-prem infrastructure and dispel both of those misconceptions by using Upbound to manage on-prem and cloud resources together.

Setup and Context

First, let's talk about the Upbound side of the setup. I created an account (it's free), installed the up CLI, and created a managed control plane called “stack”. A managed control plane is a quick and simple way to get a managed installation of Crossplane to work with. More on getting started with Upbound can be found in the Upbound documentation.

Next, I chose OpenStack, one of the most requested on-prem platforms. Because this was a development test, running DevStack in the cloud and sharing it with my peers initially seemed like the best approach. However, unavoidable NAT, my unwillingness to add yet another proxy, and limitations in the current OpenStack provider led me to use my own hardware instead. I spun up one of my servers (yes, I'm one of those people) and installed an OS and DevStack. After all, we are talking about on-premises equipment here, so let's make it real.

Installing DevStack was straightforward, and my local.conf didn't drift far from the defaults. My network in this scenario was flat, so I didn't need to mark separate endpoints for my overcloud either.

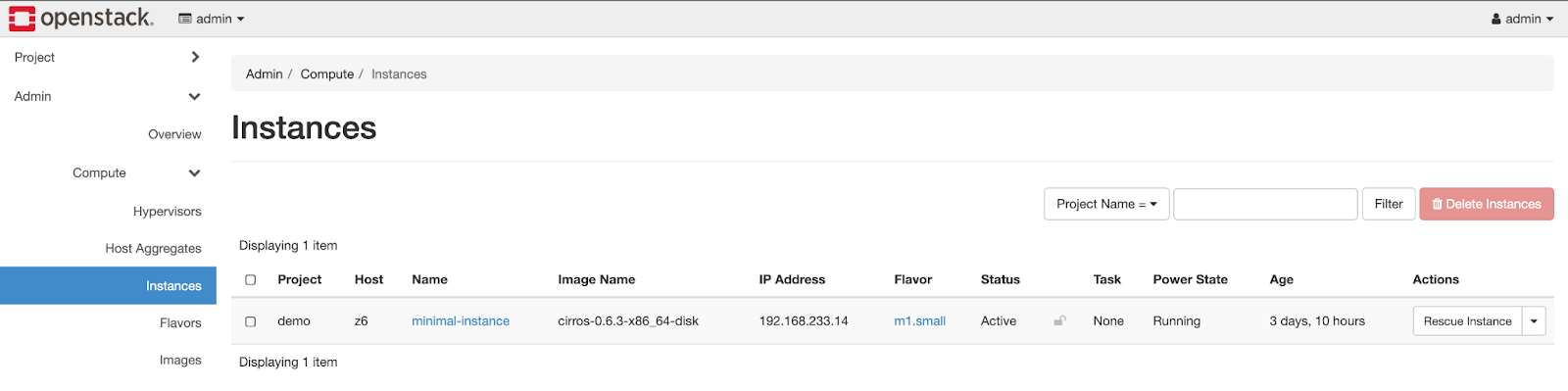

Next, I installed the OpenStack provider, created a ProviderConfig, and gave it a Secret with credentials for the DevStack API. Then it was time to see whether things worked, so I created my first managed resource: an instance, defined directly.
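
A ProviderConfig and a directly defined instance might look roughly like the sketch below. It assumes the crossplane-contrib provider-openstack, whose Upjet-generated resources mirror the Terraform OpenStack provider; the API groups and versions, the Secret and resource names, and the image name are assumptions, while the m1.small flavor and private network are what DevStack typically creates. Check the CRDs your provider version actually installs before relying on any field.

```yaml
# Sketch only: API groups/versions assume the crossplane-contrib
# provider-openstack; verify against the CRDs your provider installs.
apiVersion: openstack.crossplane.io/v1beta1
kind: ProviderConfig
metadata:
  name: default
spec:
  credentials:
    source: Secret
    secretRef:
      namespace: crossplane-system
      name: openstack-creds        # hypothetical Secret holding DevStack credentials
      key: credentials
---
# A single instance defined directly as a managed resource.
apiVersion: compute.openstack.crossplane.io/v1alpha1
kind: InstanceV2
metadata:
  name: devstack-test-vm           # hypothetical name
spec:
  forProvider:
    name: devstack-test-vm
    imageName: cirros              # hypothetical; use an image registered in Glance
    flavorName: m1.small           # typically created by DevStack
    network:
      - name: private              # DevStack's usual default project network
  providerConfigRef:
    name: default                  # points at the ProviderConfig above
```

After applying it with kubectl apply -f, running kubectl get managed shows the instance's READY and SYNCED columns as the provider reconciles it.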

Everything looked great in my Upbound dashboard, and to be sure, I checked the OpenStack side and confirmed the instance was there!

Great! Now I have a controller that continually compares the actual state against what I have defined and reconciles any differences that occur, whether they were intended or not. But let's make this a bit more useful.

Earlier, I mentioned that Upbound isn't solely about Kubernetes or exclusive to hyperscalers, a point I believe we have now demonstrated. So far we have created a VM on-prem, but now imagine a scenario where both cloud and on-prem resources are available. The business has decided that workloads above a certain amount of compute need to run on-prem, and everything else can live in the cloud. We also need to make this process invisible to our developers, who don't care about those requirements and only know whether they want a large or small amount of compute for their project. Let's make a composition to do that!

We'll start with a composition that is deliberately simplified for the sake of demonstration. It selects Azure compute when computeLoad is set to low and OpenStack when it is set to high.
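
One way to express that selection is a Pipeline-mode Composition (Crossplane 1.14 or later) that uses function-go-templating to branch on the composite resource's spec.computeLoad. The function is an assumption and must be installed as a Function package in the control plane; the example.org API group, the XCompute kind, the provider API versions, and every name, image, SSH key, and resource ID below are hypothetical placeholders.

```yaml
apiVersion: apiextensions.crossplane.io/v1
kind: Composition
metadata:
  name: xcompute                       # hypothetical name
spec:
  compositeTypeRef:
    apiVersion: example.org/v1alpha1   # hypothetical API group/version
    kind: XCompute
  mode: Pipeline
  pipeline:
    - step: select-backend
      functionRef:
        name: function-go-templating   # assumed Function package name
      input:
        apiVersion: gotemplating.fn.crossplane.io/v1beta1
        kind: GoTemplate
        source: Inline
        inline:
          template: |
            {{- $xr := .observed.composite.resource }}
            {{- if eq $xr.spec.computeLoad "high" }}
            # High load: on-prem OpenStack instance
            apiVersion: compute.openstack.crossplane.io/v1alpha1
            kind: InstanceV2
            metadata:
              annotations:
                gotemplating.fn.crossplane.io/composition-resource-name: instance
            spec:
              forProvider:
                name: {{ $xr.metadata.name }}
                imageName: ubuntu-22.04          # hypothetical Glance image
                flavorName: m1.large
                network:
                  - name: private
            {{- else }}
            # Low load: Azure VM (assumes an existing resource group and NIC)
            apiVersion: compute.azure.upbound.io/v1beta1
            kind: LinuxVirtualMachine
            metadata:
              annotations:
                gotemplating.fn.crossplane.io/composition-resource-name: instance
            spec:
              forProvider:
                location: eastus
                resourceGroupName: example-rg    # hypothetical
                size: Standard_B1s
                adminUsername: azureuser
                adminSshKey:
                  - username: azureuser
                    publicKey: "<ssh-public-key>"  # placeholder
                networkInterfaceIds:
                  - /subscriptions/<subscription-id>/resourceGroups/example-rg/providers/Microsoft.Network/networkInterfaces/example-nic
                osDisk:
                  - caching: ReadWrite
                    storageAccountType: Standard_LRS
                sourceImageReference:
                  - publisher: Canonical
                    offer: 0001-com-ubuntu-server-jammy
                    sku: 22_04-lts
                    version: latest
            {{- end }}
```

Keeping the branch inside one template means the placement policy lives in a single place; changing the threshold or the target cloud touches only the Composition, never the developer-facing API.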

Next, we define an equally simple composite resource definition (XRD) that wraps an API around these resources to expose them.
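
An XRD along these lines would expose a single computeLoad field, restricted to low or high, together with a namespaced claim. The example.org group, the XCompute and Compute kinds, and the v1alpha1 version are hypothetical names used only for this sketch.

```yaml
apiVersion: apiextensions.crossplane.io/v1
kind: CompositeResourceDefinition
metadata:
  name: xcomputes.example.org          # must be <plural>.<group>
spec:
  group: example.org                   # hypothetical API group
  names:
    kind: XCompute
    plural: xcomputes
  claimNames:                          # lets developers request compute from a namespace
    kind: Compute
    plural: computes
  versions:
    - name: v1alpha1
      served: true
      referenceable: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                computeLoad:
                  type: string
                  description: How much compute the project needs.
                  enum: ["low", "high"]
              required:
                - computeLoad
```

Making computeLoad required and constrained by an enum lets the API server reject anything other than low or high before any composition logic runs.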

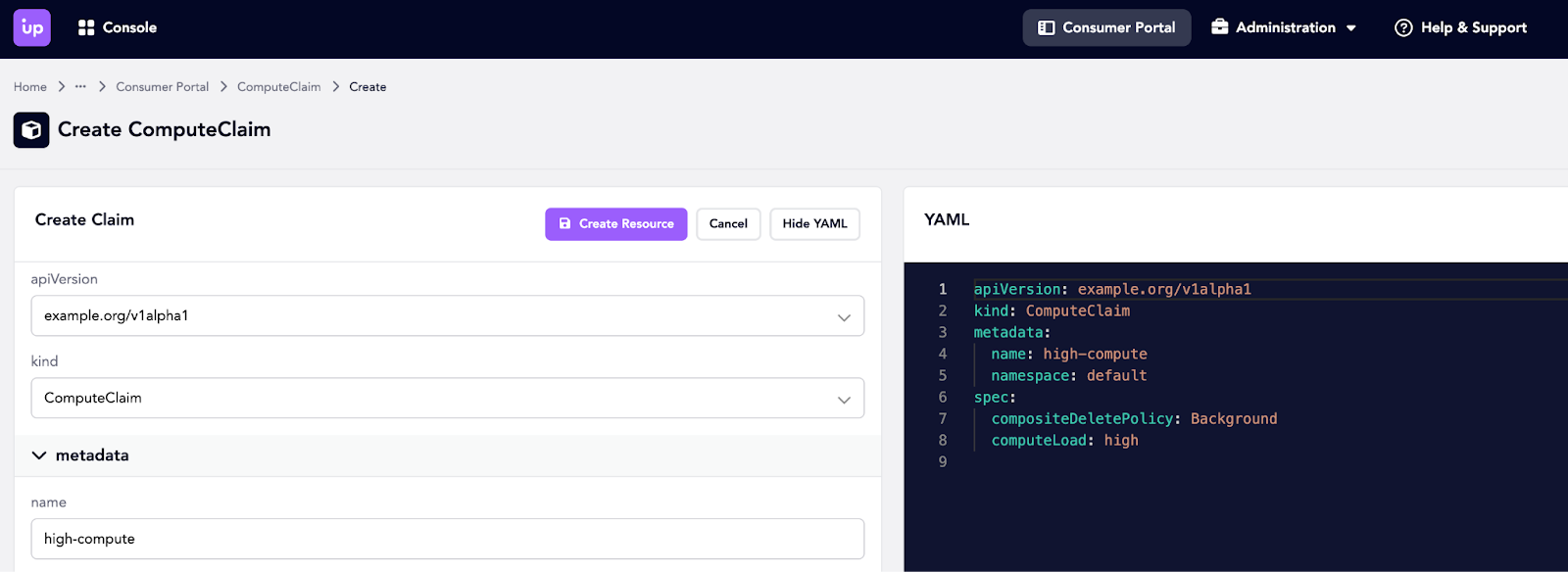

Finally, here is what consuming these looks like for someone with no knowledge of what is happening behind the scenes. From the Upbound consumer portal, we make a claim for a high compute load, which the composition fulfills with an OpenStack instance. Much like a cloud console, the portal is a great way to discover the shape of what you would like to create.

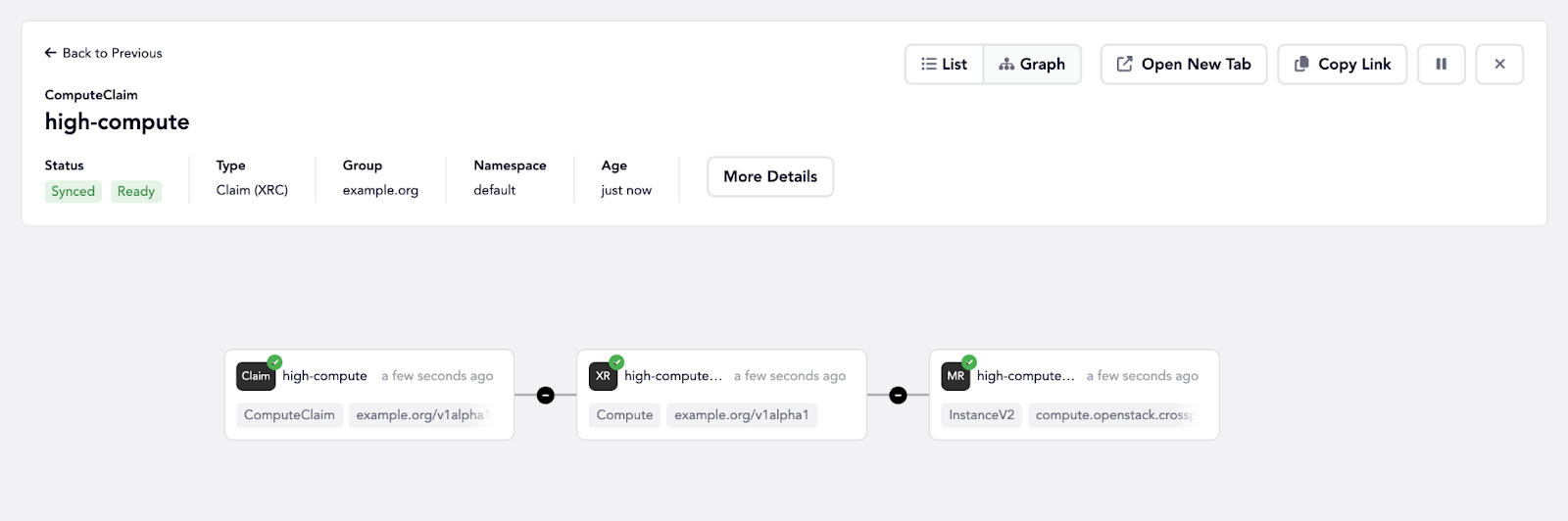
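
Behind the portal form, the request amounts to a claim. An equivalent manifest applied with kubectl might look like this sketch, which assumes a hypothetical Compute claim kind in the example.org/v1alpha1 API group and the default namespace.

```yaml
# Hypothetical claim; the developer states only how much compute they need.
apiVersion: example.org/v1alpha1
kind: Compute
metadata:
  name: team-a-compute            # hypothetical name
  namespace: default
spec:
  computeLoad: high               # "high" maps to on-prem under the business policy
```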

The ops team can then visualize the creation and verify that everything is running smoothly.

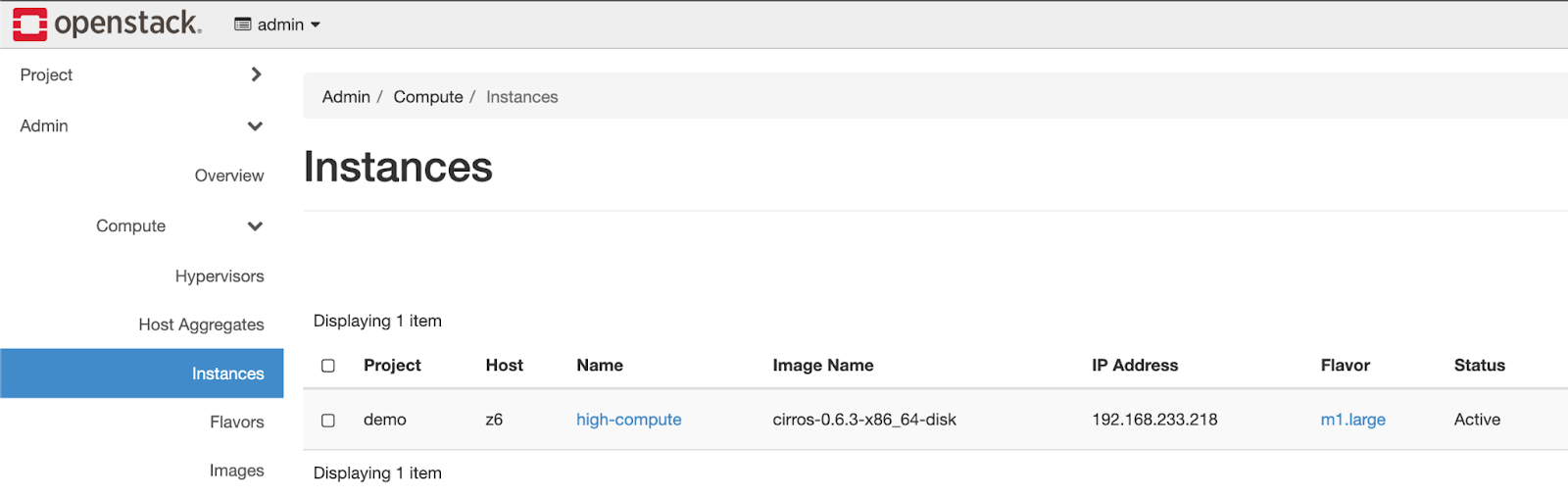

After that claim has been applied, the controller kicks off and starts building the necessary pieces in OpenStack for the new instance.

Challenges and Lessons

To be clear, it wasn't all roses. As I mentioned before, I had to get a good network path; I also hit some esoteric errors from the provider and had to work out how the provider secret needed to be configured to play nicely with Keystone. Through a series of packet captures, debug logging on the provider, and code review, I arrived at a working secret that differs from the examples in the repo. Hopefully this helps if you too are having issues.
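
For orientation, a secret of this kind might look like the sketch below. It assumes the provider reads a JSON blob whose keys mirror the Terraform OpenStack provider's arguments, and it uses DevStack-style values (Keystone under /identity/v3, RegionOne, the Default domain); the address, names, and password are hypothetical, and this is not necessarily the exact configuration that worked here.

```yaml
# Sketch only: JSON keys assumed to mirror the Terraform OpenStack
# provider's arguments; values assume DevStack defaults.
apiVersion: v1
kind: Secret
metadata:
  name: openstack-creds            # hypothetical; must match the ProviderConfig secretRef
  namespace: crossplane-system
type: Opaque
stringData:
  credentials: |
    {
      "auth_url": "http://192.0.2.10/identity/v3",
      "user_name": "admin",
      "password": "change-me",
      "tenant_name": "admin",
      "region": "RegionOne",
      "user_domain_name": "Default",
      "project_domain_name": "Default"
    }
```

Keystone v3 resolves user and project names within a domain, so missing domain fields are a likely cause of authentication failures against DevStack.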

Future Directions

Having explored offering developers resources that depend on their requirements while keeping what's behind the scenes opaque, you may be wondering what else could be created. Given Upbound's stance of taking any API, anywhere, and making it yours, the only prerequisite is just that: an API. For example, I could allow the creation of bare metal by layering in projects like Metal³ (Metal Kubed), Tinkerbell, Cluster API (CAPI), Talos, and more, calling their respective APIs while still keeping those resources trivial to consume.

If any of this sounds interesting for on-prem, hypervisors, or even bare metal, let us know! We would love to continue the conversation and explore how our platform can help you declaratively control any of your resources while lowering the cognitive load for your development teams.