7 Core Elements of an Internal Developer Platform

July 5, 2023

This article was originally posted on The New Stack by Viktor Farcic and Port.

What does it take to build an internal developer platform? What are the tools and platforms that can make it work? This post discusses the architecture and tools required to stand up a fully operational internal developer platform. To see the actual steps of setting up the platform, watch the accompanying video.

Why Do We Want an Internal Developer Platform?

Platform engineering’s overarching goal is to drive developer autonomy. If a developer needs a database, there should be a mechanism to get it, whether that person is a database administrator or a Node.js developer. If a developer needs to manage an application in Kubernetes, they should not have to spend years learning how Kubernetes works. All these actions should be simple to accomplish.

A developer should be able to accomplish what they need by defining a simple manifest or using a web UI. We want to enable all developers to consume services that will help them get what they need. Experts (platform engineers) will create those services in the internal developer portal, and users will consume them in its graphical user interface or by writing manifests directly and pushing them to git.

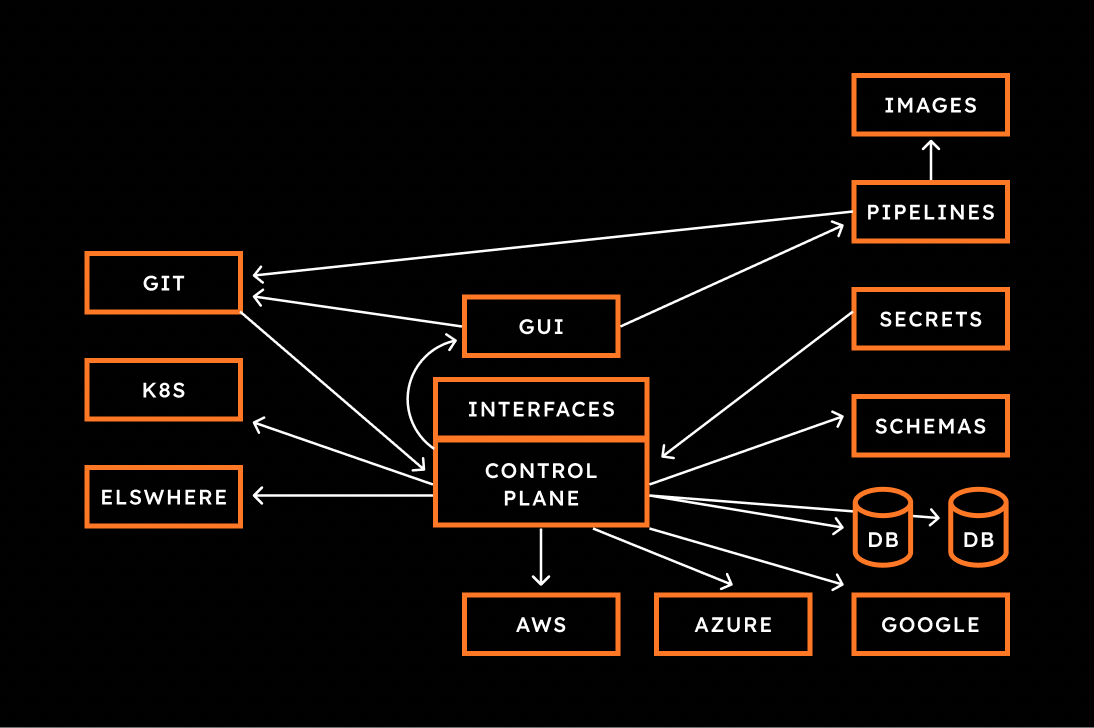

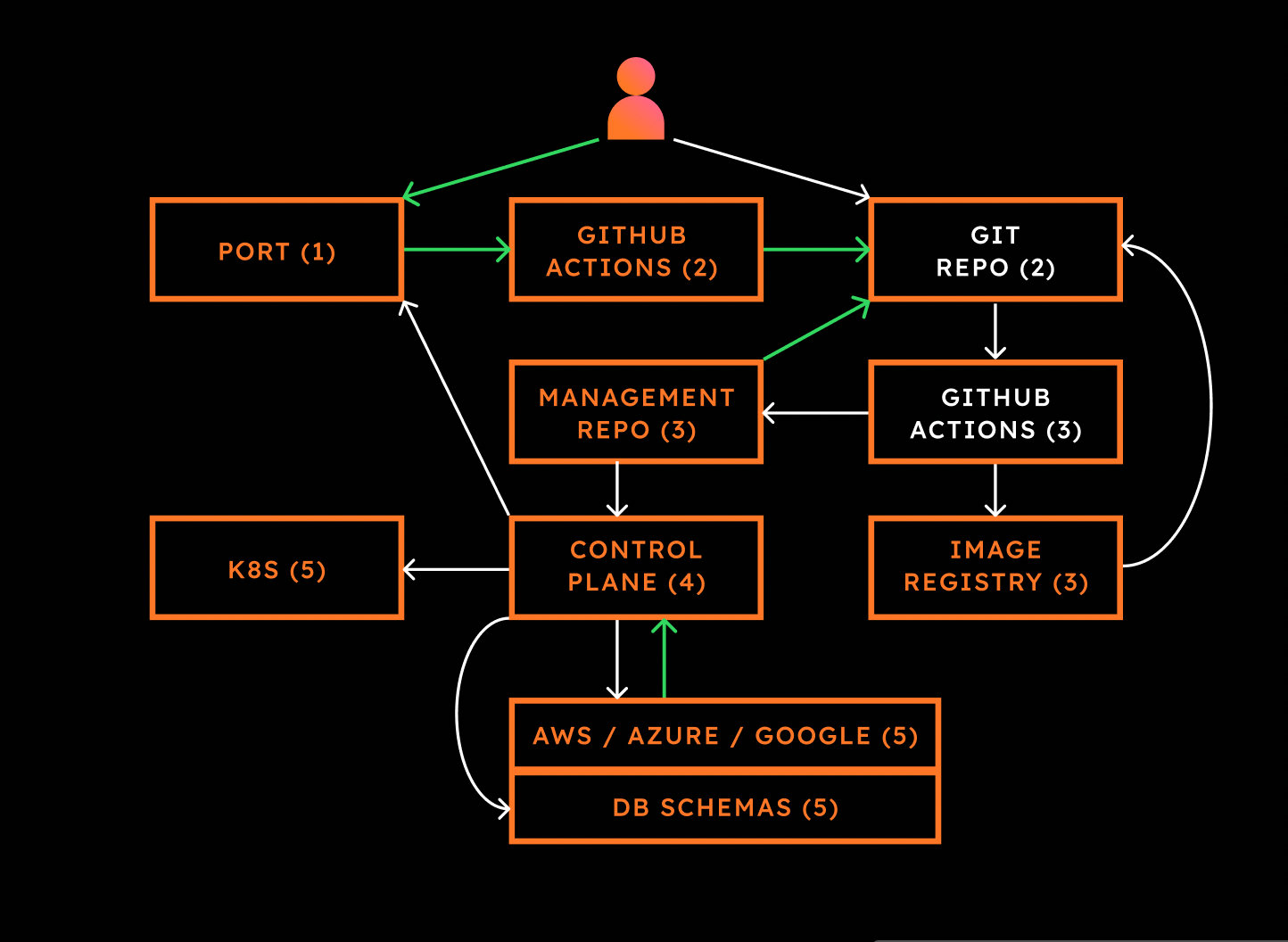

The High-Level Design of an Internal Developer Platform — 7 Core Elements

An internal developer platform needs several parts to become fully operational. For each part we recommend a tool, but any of them can be swapped for a similar tool. The core idea is to map out the functionality needed to build the platform:

- A control plane: The platform needs a control plane that manages all the resources, whether they are applications running in a Kubernetes cluster or elsewhere, or infrastructure and services in Amazon Web Services (AWS), Azure, Google Cloud or anywhere else. Our recommended tool here is Crossplane.

- A control plane interface: This will enable everyone to interact with the control plane and manage resources at the right level of abstraction. Our recommended tool here is Crossplane Compositions.

- Git: The desired states will be stored in git, so we’ll have to add a GitOps tool into the mix. Its job will be to synchronize whatever we put in git with the control plane cluster. Our recommended tool here is Argo CD.

- Database and schema management: Given that state is inevitable, we will need databases as well. Those databases will be managed by the control plane, but to work well, we also need a way to manage the schemas inside them. Our recommended tool here is SchemaHero.

- Secrets manager: For any confidential information that we cannot store in git, we’ll need a way to manage secrets in a secrets manager. Those secrets can be in any secrets manager. Our recommended tool to pull secrets from there is External Secrets Operator (ESO).

- An internal developer portal (graphical user interface): In case users don’t want to push manifests directly to git, we should provide them with a user interface that lets them see what’s running and execute processes that create new resources and store them in git. Our recommended tool here is Port.

- CI/CD pipelines: Finally, we need pipelines to execute one-shot actions such as creating new repositories from templates, building images for new releases, updating manifests and so on. Our recommended tool here is GitHub Actions.

The setup will require a few additional tools, but the list above is a must.

The diagram below shows how the elements interact with one another. You can use it as a reference as you read through this article.

Let’s examine the role of each layer in the setup:

Control Plane

Let’s start with the control plane. We need a single API acting as an entry point; this is the main point of interaction with the internal developer platform. In turn, it manages resources no matter where they are. We can use Crossplane with providers, which lets us manage not only Kubernetes resources but also AWS, Google Cloud, Azure and other types of resources. We will use kubectl to work with custom resources, defined by custom resource definitions (CRDs), that create deployments and services, manage databases in hyperscalers, and so on.
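The idea behind such a control plane is a reconcile loop: a controller repeatedly compares the desired state it was given with the actual state it observes, then acts to close the gap. The Python below is a minimal sketch of that loop, not Crossplane's implementation; `Provider`, `InMemoryProvider` and `reconcile` are hypothetical names, and the in-memory provider stands in for a real cloud or cluster API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol

Spec = Dict[str, str]


class Provider(Protocol):
    """What a controller needs from any backend: a cloud API, another cluster, etc."""

    def observe(self) -> Dict[str, Spec]: ...
    def create(self, name: str, spec: Spec) -> None: ...
    def update(self, name: str, spec: Spec) -> None: ...
    def delete(self, name: str) -> None: ...


@dataclass
class InMemoryProvider:
    """Hypothetical stand-in for a real external API; it stores resources in a dict."""

    resources: Dict[str, Spec] = field(default_factory=dict)

    def observe(self) -> Dict[str, Spec]:
        # Return a copy so callers cannot mutate the "actual" state by accident.
        return {name: dict(spec) for name, spec in self.resources.items()}

    def create(self, name: str, spec: Spec) -> None:
        self.resources[name] = dict(spec)

    def update(self, name: str, spec: Spec) -> None:
        self.resources[name] = dict(spec)

    def delete(self, name: str) -> None:
        del self.resources[name]


def reconcile(desired: Dict[str, Spec], provider: Provider) -> List[str]:
    """One pass of the control loop: issue the calls that make actual match desired."""
    actions: List[str] = []
    actual = provider.observe()
    for name, spec in desired.items():
        if name not in actual:
            provider.create(name, spec)
            actions.append(f"create {name}")
        elif actual[name] != spec:
            provider.update(name, spec)
            actions.append(f"update {name}")
    # Anything that exists but is no longer desired gets removed.
    for name in actual:
        if name not in desired:
            provider.delete(name)
            actions.append(f"delete {name}")
    return actions
```

Running `reconcile` twice in a row is the point of the design: once the actual state matches, a second pass does nothing, so the loop can run continuously and also undo any drift introduced outside the control plane.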

However, this alone isn’t enough for a full-fledged internal developer platform. An application can easily consist of dozens of resources. Infrastructure can be much more complicated than that. Most importantly, all those low-level resources are not at the right levels of abstraction for people who are not Kubernetes or AWS or Google Cloud specialists. We need something that is more user-friendly.

A User-Friendly Interface for the Control Plane

The control plane interface can act as the platform API when you’re 100% GitOps. It shouldn’t be confused with the internal developer portal, which acts as the graphical user interface. We can use Crossplane Compositions for that.

What is the right level of abstraction for the users of the platform we’re building? The rule is that we should hide, or abstract, anything that people don’t really care about when they use the internal developer platform. For instance, they probably don’t care about subnets or database storage. The right level of abstraction depends on the actual use of the platform and will differ from one organization to another. It’s up to you to discover how to best serve your customers and everyone else in your organization.

Crossplane Compositions enable us to create abstractions that simplify the management of different kinds of applications. Next, we probably do not want anyone to interact directly with the cluster or the control plane. Instead of sending requests directly to the control plane, people should store their desired states in git.
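The effect of a Composition can be sketched in plain Python: a small, developer-facing claim is expanded into the many low-level resources that platform engineers care about. Real Crossplane Compositions are declared in YAML and processed by Crossplane itself; the `AppClaim` fields, the size-to-instance mapping, the fixed subnet CIDR and the resource kinds below are all hypothetical, chosen only to show which details get hidden.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical mapping owned by platform engineers; developers never see it.
DB_SIZES: Dict[str, Dict[str, str]] = {
    "small": {"instanceClass": "db.t3.micro", "storageGB": "20"},
    "large": {"instanceClass": "db.m5.large", "storageGB": "200"},
}


@dataclass
class AppClaim:
    """What a developer writes: only the details they care about."""

    name: str
    image: str
    db_size: str = "small"


def compose(claim: AppClaim) -> List[Dict[str, object]]:
    """Expand one claim into the low-level resources it implies."""
    if claim.db_size not in DB_SIZES:
        raise ValueError(f"unknown db_size {claim.db_size!r}; expected one of {sorted(DB_SIZES)}")
    db = DB_SIZES[claim.db_size]
    return [
        {"kind": "Deployment", "name": claim.name, "image": claim.image, "replicas": 2},
        {"kind": "Service", "name": claim.name, "port": 80},
        # A fixed CIDR keeps the sketch short; a real platform would allocate one.
        {"kind": "Subnet", "name": f"{claim.name}-db-subnet", "cidr": "10.0.1.0/24"},
        {"kind": "DatabaseInstance", "name": f"{claim.name}-db", **db},
    ]
```

Developers fill in three fields; subnets, storage and instance classes stay with the platform team, which matches the kind of detail the text above suggests hiding.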

Synchronize from Git with GitOps

Changing the state of resources by directly communicating with the control plane should not be allowed, since no one will know who changed what and when. Instead, we should push the desired state into git and, optionally, do reviews through pull requests. If we plug GitOps tools into the platform, the desired state will be synchronized with the control plane, which in turn will convert it into the actual state.

This is a safer approach as it doesn’t allow direct access to the control plane and also keeps track of the desired state. I recommend doing this with Argo CD, but Flux and other solutions are just as good.
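A GitOps tool keeps comparing what is in git with what is running. The sketch below models that comparison in Python, loosely echoing Argo CD's Synced/OutOfSync status and its optional pruning of resources that exist in the cluster but not in git. It is an illustration under simplified assumptions (resources identified only by kind and name, manifests as plain dicts), not Argo CD's code or API.

```python
from typing import Dict, List, Tuple

Manifest = Dict[str, object]
Key = Tuple[str, str]


def key(m: Manifest) -> Key:
    """Identify a resource by kind and name (a simplification of Kubernetes identity)."""
    return (str(m["kind"]), str(m["name"]))


def diff(git: List[Manifest], live: List[Manifest]) -> Dict[str, List[Key]]:
    """Compare the manifests at a git commit with what is running in the cluster."""
    desired = {key(m): m for m in git}
    current = {key(m): m for m in live}
    return {
        "missing": sorted(k for k in desired if k not in current),
        "changed": sorted(k for k in desired if k in current and desired[k] != current[k]),
        "extra": sorted(k for k in current if k not in desired),
    }


def status(d: Dict[str, List[Key]]) -> str:
    """Synced only when nothing is missing, changed or extra."""
    return "Synced" if not any(d.values()) else "OutOfSync"


def sync(git: List[Manifest], live: List[Manifest], prune: bool = False) -> List[Manifest]:
    """Return the new live state: git always wins; extras are removed only when pruning."""
    result = {key(m): m for m in git}
    if not prune:
        for m in live:
            result.setdefault(key(m), m)
    return [result[k] for k in sorted(result)]
```

A resource created by hand in the cluster shows up under `extra`, which is how a GitOps setup surfaces changes that bypassed git and therefore lack a record of who changed what and when.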

Schema Management

Databases need schemas. They differ from one application to another. To complete our internal developer platform, we need to figure out how to manage schemas, preferably as part of application definitions stored in git. There are many ways to manage schemas, but only a few enable us to specify them in a way that fits into the git model. The complication is that GitOps tools work only with Kubernetes resources, and that means that schemas should be defined as Kubernetes resources as well. This requires us to extend the Kubernetes API with CRDs that will enable us to define schemas as Kubernetes resources. I recommend using SchemaHero for that.
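To see why declarative schemas fit the git model, consider what a schema tool has to do: compare the table definition stored in git with the live table and work out the DDL in between. The function below is a simplified, hypothetical sketch of that planning step; SchemaHero does this from its own Kubernetes resources and handles many more cases than this. The `ALTER COLUMN ... TYPE` syntax assumes PostgreSQL.

```python
from typing import Dict, List

Columns = Dict[str, str]  # column name -> SQL type


def plan_migration(table: str, desired: Columns, current: Columns) -> List[str]:
    """Compute the DDL that moves a table from its live schema to its declared schema."""
    if not current:
        # The table does not exist yet: create it in one statement.
        cols = ", ".join(f"{name} {typ}" for name, typ in desired.items())
        return [f"CREATE TABLE {table} ({cols});"]
    statements: List[str] = []
    for name, typ in desired.items():
        if name not in current:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {typ};")
        elif current[name] != typ:
            statements.append(f"ALTER TABLE {table} ALTER COLUMN {name} TYPE {typ};")
    # Columns that are no longer declared are dropped.
    for name in current:
        if name not in desired:
            statements.append(f"ALTER TABLE {table} DROP COLUMN {name};")
    return statements
```

Because the plan is derived from a declaration rather than written by hand, the same definition in git can be applied to a fresh database or an existing one.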

Secret Management

Some information shouldn’t be stored in git. Having confidential information such as passwords in git could easily result in a breach. Instead, we might want to store those in a secret manager like HashiCorp Vault or a solution provided by whichever hyperscaler you’re using. Still, those secrets need to reach the control plane so that processes inside it can authenticate with external APIs or access services, for example, databases. I recommend using External Secrets Operator (ESO) for that.
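What External Secrets Operator does can be sketched as follows: git holds only a mapping from secret keys to entries in the external store, and the operator fetches the values and writes a regular Kubernetes Secret into the cluster. This Python function is a hypothetical illustration, not ESO's code; the in-memory `store` dict stands in for Vault or a hyperscaler's secrets manager, and the key layout is assumed. The Secret shape (`apiVersion: v1`, `kind: Secret`, base64-encoded values under `data`) follows the standard Kubernetes format.

```python
import base64
from typing import Dict, Mapping


def to_k8s_secret(
    name: str, namespace: str, mapping: Mapping[str, str], store: Mapping[str, str]
) -> Dict[str, object]:
    """Build a Kubernetes Secret from values fetched out of an external store.

    mapping: key in the cluster Secret -> key in the external store (hypothetical layout).
    store:   stand-in for a secrets manager; only this side ever holds the plaintext.
    """
    missing = [remote for remote in mapping.values() if remote not in store]
    if missing:
        raise KeyError(f"not found in secrets manager: {missing}")
    data = {
        local: base64.b64encode(store[remote].encode("utf-8")).decode("ascii")
        for local, remote in mapping.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": data,  # Kubernetes expects base64-encoded values under "data"
    }
```

Only the `mapping` argument would need to live in git; the plaintext values never leave the secrets manager and the cluster.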

Internal Developer Portal — Graphical User Interface

The internal developer platform needs a user interface that sits on top of everything we’ve built so far. This is the internal developer portal. It provides both a catalog of services people can use and an interface for developers to perform the actions we want them to carry out autonomously. Specifically, we need a way to initiate a process that creates new repositories for applications, adds sample code, provides manifests for the databases and other dependencies, creates CI/CD pipelines and so on.
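The repository-creation action can be pictured as rendering a template owned by the platform team. The sketch below uses a hypothetical file layout and placeholder file contents; only the name check reflects a real constraint, mirroring the RFC 1123 label rule that Kubernetes applies to names such as namespaces (lowercase alphanumerics and `-`, starting and ending with an alphanumeric character, at most 63 characters).

```python
import re
from typing import Dict

# Hypothetical template owned by the platform team; "{name}" is replaced per app.
# File contents are placeholders, not complete manifests or workflows.
TEMPLATE: Dict[str, str] = {
    "README.md": "# {name}\n",
    "src/main.py": 'print("hello from {name}")\n',
    "k8s/app.yaml": "kind: App\nname: {name}\n",
    "k8s/database.yaml": "kind: Database\nname: {name}-db\nsize: small\n",
    ".github/workflows/ci.yaml": "name: {name} CI\non: push\n",
}

# RFC 1123 label: lowercase alphanumerics and '-', alphanumeric at both ends.
LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def scaffold(name: str) -> Dict[str, str]:
    """Render every template file for a new application repository."""
    if len(name) > 63 or not LABEL.fullmatch(name):
        raise ValueError(f"{name!r} is not a valid RFC 1123 label")
    return {path: body.replace("{name}", name) for path, body in TEMPLATE.items()}
```

Validating the name up front means the portal rejects a bad request before any repository, pipeline or cloud resource is created.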

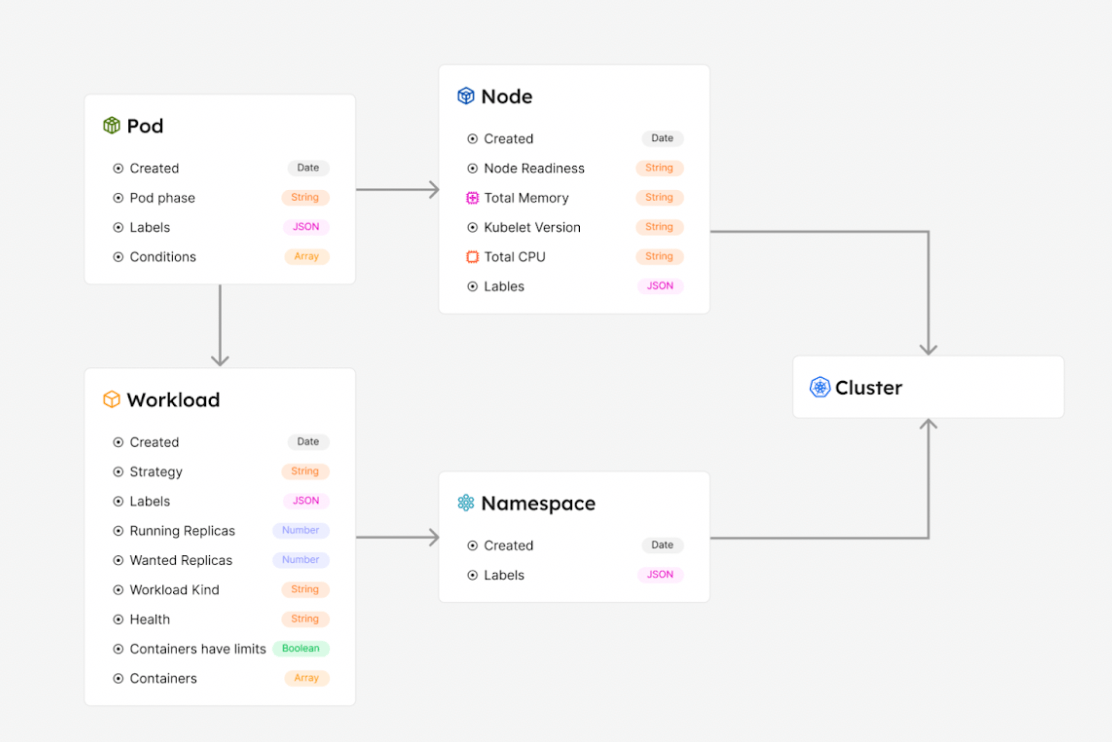

For this setup we began with the Kubernetes catalog template from Port.

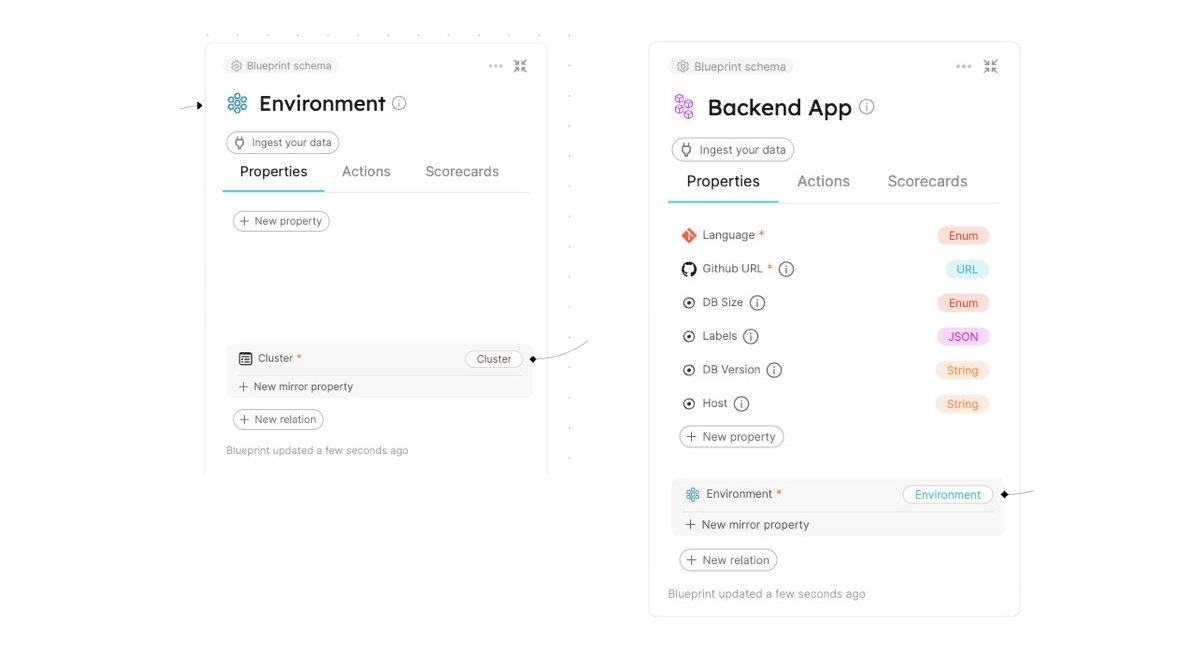

We then add two additional blueprints, Backend App and Environment, both related to the cluster blueprint.
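Blueprints and their relations can be thought of as a small typed data model. The Python below is a conceptual sketch of that idea, not Port's blueprint format or API: it declares a cluster blueprint plus the two blueprints related to it and checks that each entity's relations point to an existing entity of the right blueprint. The identifiers (`cluster`, `environment`, `backendApp`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Blueprint:
    """An entity type in the catalog; relations map a relation name to a target blueprint."""

    identifier: str
    relations: Dict[str, str] = field(default_factory=dict)


@dataclass
class Entity:
    """A concrete catalog item; relations map a relation name to a target entity id."""

    identifier: str
    blueprint: str
    relations: Dict[str, str] = field(default_factory=dict)


# Hypothetical identifiers: the cluster blueprint plus two blueprints related to it.
BLUEPRINTS: Dict[str, Blueprint] = {
    "cluster": Blueprint("cluster"),
    "environment": Blueprint("environment", {"cluster": "cluster"}),
    "backendApp": Blueprint("backendApp", {"cluster": "cluster"}),
}


def validate(entities: List[Entity], blueprints: Dict[str, Blueprint] = BLUEPRINTS) -> List[str]:
    """Return one message per relation that is undeclared, dangling or of the wrong type."""
    by_id = {e.identifier: e for e in entities}
    errors: List[str] = []
    for e in entities:
        declared = blueprints[e.blueprint].relations
        for rel, target_id in e.relations.items():
            if rel not in declared:
                errors.append(f"{e.identifier}: undeclared relation {rel!r}")
            elif target_id not in by_id:
                errors.append(f"{e.identifier}: {rel} points to missing entity {target_id!r}")
            elif by_id[target_id].blueprint != declared[rel]:
                errors.append(f"{e.identifier}: {rel} must point to a {declared[rel]} entity")
    return errors
```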

CI/CD Pipelines

Finally, we need pipelines. They are the last piece of the puzzle.

Even though we are using GitOps to synchronize the actual state with the desired state, we still need pipelines for one-shot actions that should be executed only once per commit. These could be steps to build binaries, run tests, build and push container images and so on.
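As a rough illustration of one-shot steps, the sketch below runs a list of commands in order, stops at the first failure, and derives an image tag from the commit SHA so that each commit maps to exactly one image. It is a hypothetical stand-in for what a GitHub Actions workflow would declare in YAML; `run_pipeline` and `image_tag` are illustrative names, and the commented example assumes `pytest` and `docker` are installed.

```python
import subprocess
from typing import List, Sequence, Tuple

Step = Tuple[str, Sequence[str]]  # (step name, command argv)


def image_tag(registry: str, app: str, commit_sha: str) -> str:
    """Tag images with the short commit SHA so each commit maps to one image."""
    return f"{registry}/{app}:{commit_sha[:7]}"


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run one-shot steps in order and stop at the first failure, like a CI job."""
    completed: List[str] = []
    for name, argv in steps:
        result = subprocess.run(list(argv), capture_output=True, text=True)
        if result.returncode != 0:
            raise RuntimeError(f"step {name!r} failed: {result.stderr.strip()}")
        completed.append(name)
    return completed


# Example (assumes pytest and docker are available on the runner):
# tag = image_tag("ghcr.io/acme", "orders", "<full commit sha>")
# run_pipeline([
#     ("test", ["python", "-m", "pytest"]),
#     ("build", ["docker", "build", "-t", tag, "."]),
#     ("push", ["docker", "push", tag]),
# ])
```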

The Internal Developer Platform in Action

From the user (developer) perspective, a new application can be created with a simple click on a button in a Web UI or by defining a very simple manifest and pushing it to git. After that, the same interface can be used to observe all the relevant information about that application and corresponding dependencies.

Behind the scenes, however, the flow would be as follows.

- The user interacts with a Web UI (Port) or directly with git. The job of the internal developer portal in this case is to trigger an action that will create all the necessary resources.

- Creating all the relevant resources is the job of a pipeline, such as a GitHub Actions workflow, which creates a new repository with all the relevant files, such as source code, pipelines, application manifests, etc.

- As a result of pushing changes to the application repository (either as a result of the previous action or, later on, by making changes to the code), an application-specific pipeline (GitHub Actions) is triggered, which, at a minimum, builds a container image, pushes it to the image registry and updates the manifests in the management repo, which is monitored by GitOps tools like Argo CD or Flux.

- GitOps tools detect changes to the management repo and synchronize them with the resources in the control plane cluster.

- The resources in the control plane cluster are picked up by corresponding controllers (Crossplane), which in turn create application resources (in other Kubernetes clusters or as hyperscaler services like AWS Lambda, Azure Container Apps or Google Cloud Run) as well as dependent resources like databases (self-managed or as services in a hyperscaler).
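The step in which the application pipeline updates the manifests in the management repo usually amounts to changing one image reference and committing the result. A minimal sketch, assuming the Deployment manifest is stored as JSON (which Kubernetes accepts as well as YAML) so that only the standard library is needed; `bump_image`, the container name and the repository layout are hypothetical, and the commit and push are left out.

```python
import json


def bump_image(manifest_text: str, container: str, new_image: str) -> str:
    """Point one container of a Deployment manifest at a new image.

    The pipeline would commit the result to the management repo; Argo CD or Flux
    then detects the change and syncs it to the control plane cluster.
    """
    manifest = json.loads(manifest_text)
    if manifest.get("kind") != "Deployment":
        raise ValueError("expected a Deployment manifest")
    # Standard Deployment layout: spec.template.spec.containers[].{name,image}
    for c in manifest["spec"]["template"]["spec"]["containers"]:
        if c["name"] == container:
            c["image"] = new_image
            return json.dumps(manifest, indent=2, sort_keys=True) + "\n"
    raise KeyError(f"no container named {container!r}")
```

Writing with `sort_keys=True` keeps the output stable, so the commit diff shows only the image line that actually changed.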
