If you are running in a public cloud, your applications and services are likely consuming numerous managed platform services. While your code is deployed in VMs, containers, or functions, it likely depends on platform services like databases, message queues, analytics, big data, AI, ML, and many others.

What makes these managed services attractive is that you do not have to worry about provisioning, scaling, upgrades, patching, backup, disaster recovery, and the many other tasks required to run a production-grade system. The cloud provider takes on that management and backs the service with an SLA. In return, you pay for the management of these services above and beyond the raw hosting costs.

Managed services, however, are typically proprietary and tied to your cloud provider’s platform. Even managed services based on Open Source Software (OSS) are consumed through proprietary APIs and rely on provisioning and management logic that is tightly integrated with the cloud provider’s platform. As a result, there has been healthy debate over whether using managed services leads to vendor lock-in, and whether they should be avoided.

To those that caution against using cloud native managed services for fear of lock-in, you’ve no idea of the cost of your fear.

— Subbu Allamaraju (@sallamar) October 11, 2018

In this post, we will explore some of these arguments and make the case that using managed services is a good thing and does not necessarily lead to vendor lock-in.

What’s the cost of management?

First, it might be helpful to estimate the value being provided by the managed service provider. One way to do that is to compare the cost of managed services to the base cost of compute and storage.

In the following analysis, we compare the cost of running four different managed services in AWS against the base cost of compute and storage. We focus on managed services based on OSS to eliminate any commercial licensing cost, and to better approximate what you’d save by running these services yourself.

For compute, we focused on m4 general-purpose instances and looked at on-demand pricing as well as the best reserved pricing (3-year contract, all upfront). The following chart shows the compute markup above and beyond the cost of comparable virtual machines in EC2.

For RDS, the markup ranges from 74% to 83%. For ElastiCache, the markup is about 56%, although with reserved instances it drops to 1% (which might reflect a promotion AWS is running). For Elasticsearch, the markup ranges from 51% to 93%.
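The markup figures are simple ratios: how much more the managed service costs per hour than a comparable VM, expressed as a percentage. Here is a minimal sketch of that calculation. The prices in it are hypothetical round numbers chosen for illustration, not actual AWS list prices, and the variable names are our own.

```python
def markup_pct(managed_price: float, base_price: float) -> float:
    """Percent by which a managed-service price exceeds the comparable base price.

    Both prices must use the same unit, e.g. USD per instance-hour.
    """
    if base_price <= 0:
        raise ValueError("base_price must be positive")
    return (managed_price / base_price - 1.0) * 100.0


HOURS_PER_YEAR = 24 * 365

# Hypothetical prices for illustration only, NOT actual AWS list prices.
vm_hourly = 0.20       # comparable EC2 instance
managed_hourly = 0.35  # managed service on the same instance class

print(f"markup: {markup_pct(managed_hourly, vm_hourly):.0f}%")
print(f"extra per instance-year: ${(managed_hourly - vm_hourly) * HOURS_PER_YEAR:,.0f}")
```

Multiplying the hourly difference by the hours in a year is a quick way to turn a percentage into a per-instance dollar figure you can weigh against the cost of running the service yourself.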

Storage is marked up as well, as the following chart shows for both general purpose storage and provisioned IOPS storage.

For RDS, general purpose storage is marked up 15%. For provisioned IOPS, the storage rate is the same as the base rate, but each IOPS-month is marked up 54%. For Elasticsearch, it’s a flat 35% markup on storage across the board.
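Because RDS provisioned IOPS volumes mark up only the IOPS component, the effective markup on your bill depends on how many IOPS you provision relative to storage. The sketch below computes that blended markup using the 0% storage and 54% IOPS-month figures above. The base rates are hypothetical placeholders, not quoted AWS prices.

```python
def blended_markup(storage_gb: float, iops: float,
                   base_gb_rate: float, base_iops_rate: float,
                   gb_markup: float = 0.0, iops_markup: float = 0.54) -> float:
    """Effective markup (as a fraction) on a provisioned-IOPS volume's monthly bill.

    Rates are per GB-month and per IOPS-month. The default markups mirror the
    RDS provisioned-IOPS figures: storage at the base rate, IOPS marked up 54%.
    """
    base = storage_gb * base_gb_rate + iops * base_iops_rate
    if base <= 0:
        raise ValueError("volume must have a positive base cost")
    managed = (storage_gb * base_gb_rate * (1 + gb_markup)
               + iops * base_iops_rate * (1 + iops_markup))
    return managed / base - 1.0


# Hypothetical base rates, NOT actual AWS prices.
GB_RATE, IOPS_RATE = 0.125, 0.065

for gb, iops in [(1000, 1000), (100, 1000), (100, 10000)]:
    pct = 100 * blended_markup(gb, iops, GB_RATE, IOPS_RATE)
    print(f"{gb:>5} GB, {iops:>6} IOPS -> {pct:.1f}% effective markup")
```

The more IOPS-heavy the volume, the closer the effective markup gets to the full 54%; a large, lightly provisioned volume pays very little extra.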

In summary, cloud providers earn a healthy margin on managed services. We leave it up to you to decide whether that is too much or too little. It is worth mentioning that managed services are the high-margin part of a cloud provider’s business, while raw compute and storage are high-volume, low-margin.

Now that we have a rough understanding of the cost, let’s consider the alternatives.

Should you run your own services?

There are numerous reasons why you might choose to run and manage your own platform software instead of using a managed service. We’ll explore a few here.

First, you might not be running on a public cloud provider, or your cloud provider might not have a managed offering for the platform software you need. In such cases you have no choice but to run it yourself. For example, Microsoft Azure does not currently offer a managed Elasticsearch, although you can install it from the Azure Marketplace and run it yourself.

Second, you might need a specific version of a platform service, require a feature that is not available in the managed offering, or have made modifications to the software that are critical to your business. In such cases, you might choose to run it yourself.

Finally, you might want to control costs. Some companies are sensitive to hosting costs and have the engineering and DevOps staff to run and manage their own platform services.

What about Commercial OSS Cloud Offerings?

There are a number of Commercial Open Source companies that now have cloud offerings. Cockroach Labs, Confluent, Elastic, DataStax, and others have managed offerings that run on one or more cloud providers, and some have formed business relationships with the cloud providers.

Today we’re launching Managed CockroachDB, the fully hosted and fully managed service created and run by Cockroach Labs that makes deploying, scaling, and managing CockroachDB effortless. https://t.co/78wsfhYDwI pic.twitter.com/hhIBwshBvm

— CockroachDB (@CockroachDB) October 30, 2018

These offerings can be a good alternative to running and managing the services yourself. They can run on multiple cloud providers and even on-premises. Some of these commercial offerings also include features that are not available in the OSS or community version. They can also be cheaper than your cloud provider’s managed service, especially once you factor in the markup on storage.

Keep in mind, however, that these are typically independent cloud offerings with their own APIs and consoles. They generally do not integrate with your cloud provider’s API, management console, monitoring and observability, logging, and other tooling.

It’s really great to see commercial open source companies benefit from projects they’ve created and sponsored for many years. We will cover the dynamics between Commercial OSS and cloud providers in a future post.

What about Kubernetes Operators?

Over the last few years, we’ve seen Kubernetes emerge as the de facto operating system for clusters and clouds. Kubernetes clusters are widely deployed in production, both on-premises and in public clouds. Kubernetes has made a lot of recent advances in supporting stateful workloads, and the community has been moving toward building and running more of them directly on Kubernetes.

Kubernetes has made huge improvements in the ability to run stateful workloads including databases and message queues, but I still prefer not to run them on Kubernetes.

— Kelsey Hightower (@kelseyhightower) February 13, 2018

Projects like Rook (a project we started, and now a CNCF project) have shown that you can run complex storage systems on Kubernetes and do so with a high degree of automation. However, make no mistake, this is not the same as running managed services. Getting to a high degree of automation is great, but the burden of management is still on the user if you run platform services yourself on Kubernetes.

Platform services are complex and have many failure conditions, and we are still far from being able to automate all of that complexity. You would still need a team of SREs to manage platform software at scale, or a professional services contract with a company that can support you. Automation reduces the management burden, but it will not eliminate it. And unlike a managed service, running it yourself does not come with an SLA.
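To make this concrete, here is a deliberately simplified sketch of an Operator-style reconcile pass. It is not Rook’s code or any real Operator’s API; `ClusterState`, the condition names, and the action strings are all hypothetical. The point is structural: the loop remediates only the failure modes someone anticipated and wrote code for, and anything else falls through to a human.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClusterState:
    replicas: int    # replicas currently running
    condition: str   # hypothetical health condition, e.g. "ok" or "replica_lagging"


# Conditions this hypothetical Operator has been taught to remediate on its own.
AUTOMATED_CONDITIONS = {"ok", "replica_lagging"}


def reconcile(desired_replicas: int, observed: ClusterState) -> List[str]:
    """Return the actions a single reconcile pass would take."""
    if observed.condition not in AUTOMATED_CONDITIONS:
        # A failure mode nobody automated: take no action, escalate to a human.
        return [f"page-oncall: {observed.condition}"]
    actions: List[str] = []
    if observed.condition == "replica_lagging":
        actions.append("rebuild-lagging-replica")
    # Converge the observed replica count toward the desired count.
    if observed.replicas < desired_replicas:
        actions.append(f"add-replicas: {desired_replicas - observed.replicas}")
    elif observed.replicas > desired_replicas:
        actions.append(f"remove-replicas: {observed.replicas - desired_replicas}")
    return actions


print(reconcile(3, ClusterState(replicas=2, condition="ok")))
print(reconcile(3, ClusterState(replicas=3, condition="split_brain")))
```

Every new failure mode means more code in `reconcile`, and the `page-oncall` branch never goes away. That branch is the SRE team, or the support contract, that you still need.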


What about vendor lock-in?

Vendor lock-in happens when you take a dependency on a service or software that is only offered by a single provider, and the cost of switching away from it is high or prohibitive.

Let’s consider the case of an application that requires a MySQL database. Your application is likely compiled with a MySQL client or connector of some sort and expects to talk to a MySQL server. The MySQL wire protocol, while not an RFC standard like TCP, is widely implemented.
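Part of what makes the protocol portable is that servers speaking it open every connection the same way: the server sends a HandshakeV10 greeting framed by a 4-byte packet header (3-byte little-endian payload length plus a 1-byte sequence id). The sketch below parses only the first few fields of that greeting, following the MySQL client/server protocol documentation; a real client also handles capability flags, auth plugin data, and TLS. The `fake_greeting` helper and the version string are hypothetical test fixtures, not captured traffic.

```python
import struct


def parse_handshake(packet: bytes) -> dict:
    """Parse the start of a MySQL HandshakeV10 (server greeting) packet."""
    if len(packet) < 5:
        raise ValueError("packet too short")
    # Packet header: 3-byte little-endian payload length, then 1-byte sequence id.
    length = int.from_bytes(packet[0:3], "little")
    seq_id = packet[3]
    payload = packet[4:4 + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    protocol_version = payload[0]
    if protocol_version != 10:
        raise ValueError(f"unsupported protocol version {protocol_version}")
    # Server version: NUL-terminated string right after the version byte.
    end = payload.index(b"\x00", 1)
    server_version = payload[1:end].decode("ascii")
    # Connection (thread) id: 4-byte little-endian integer after the NUL.
    (connection_id,) = struct.unpack_from("<I", payload, end + 1)
    return {"seq_id": seq_id, "protocol_version": protocol_version,
            "server_version": server_version, "connection_id": connection_id}


def fake_greeting(version: str, conn_id: int) -> bytes:
    """Hypothetical fixture: a truncated greeting with just the fields parsed above."""
    payload = bytes([10]) + version.encode("ascii") + b"\x00" + struct.pack("<I", conn_id)
    return len(payload).to_bytes(3, "little") + bytes([0]) + payload


print(parse_handshake(fake_greeting("8.0.13", 42)))
```

Any server that emits this greeting can sit behind the same client library, which is why a dependency on the protocol keeps your options open.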

If you’re running in AWS, you have three managed MySQL options: RDS Aurora, RDS MySQL, and RDS MariaDB. Google Cloud offers Cloud SQL, and Azure offers a managed MySQL service as well.

If you’re running on-premises or on Kubernetes, there are a number of choices, including Vitess (a CNCF project) and numerous MySQL Operators. Many vendors also offer managed services, professional services, and support for MySQL.
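In practice, taking a dependency on the protocol rather than on a provider means the provider shows up only in configuration. Here is a minimal sketch, assuming the application reads its MySQL endpoint from environment variables; the variable names (`MYSQL_HOST` and friends) and all hostnames are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class MySQLEndpoint:
    host: str
    port: int = 3306
    database: str = "app"


def endpoint_from_env(env: Mapping[str, str] = os.environ) -> MySQLEndpoint:
    """Read the MySQL target from configuration so the code never names a provider."""
    return MySQLEndpoint(
        host=env.get("MYSQL_HOST", "localhost"),
        port=int(env.get("MYSQL_PORT", "3306")),
        database=env.get("MYSQL_DATABASE", "app"),
    )


# Hypothetical deployments: only the configuration differs, never the application code.
deployments = {
    "aws": {"MYSQL_HOST": "orders-db.aws.example.com"},
    "gcp": {"MYSQL_HOST": "orders-db.gcp.example.com"},
    "kubernetes": {"MYSQL_HOST": "mysql.orders.svc.cluster.local"},
    "laptop": {},
}

for name, env in deployments.items():
    ep = endpoint_from_env(env)
    print(f"{name:<10} -> {ep.host}:{ep.port}/{ep.database}")
```

With a driver such as PyMySQL, the resulting endpoint would be passed to `pymysql.connect(host=ep.host, port=ep.port, database=ep.database, user=..., password=...)`; credentials are left out of the sketch.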

These choices are available to you because your application took a dependency on an open source project like MySQL. If you had instead chosen to depend on AWS DynamoDB, your choices would be limited to running on AWS. Even test and staging workloads would need to run in AWS or rely on alternatives like DynamoDB Local.

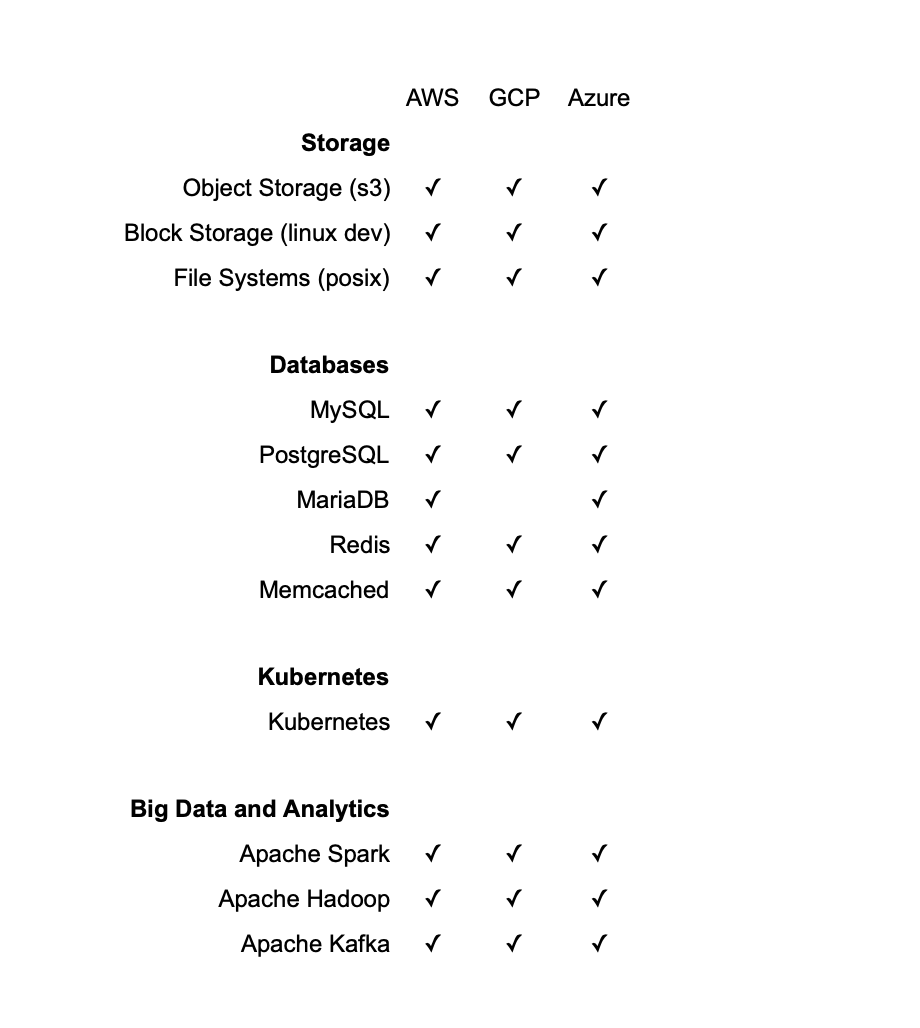

Here’s an incomplete list of managed services in AWS, GCP and Azure that are based on Open Source software or have widely supported wire protocols or interfaces:

Conclusion

In conclusion, we believe managed services don’t always lead to vendor lock-in. Before you choose a managed service, consider whether it is based on an open source project or uses a widely adopted wire protocol or interface.

Use managed services based on open source software or widely supported wire protocols or interfaces whenever you can
